publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2026

- Preprint

Unified Primitive Proxies for Structured Shape Completion
Zhaiyu Chen, Yuqing Wang, and Xiao Xiang Zhu
2026

Structured shape completion recovers missing geometry as primitives rather than as unstructured points, which enables primitive-based surface reconstruction. Instead of following the prevailing cascade, we rethink how primitives and points should interact, and find it more effective to decode primitives in a dedicated pathway that attends to shared shape features. Following this principle, we present UniCo, which in a single feed-forward pass predicts a set of primitives with complete geometry, semantics, and inlier membership. To drive this unified representation, we introduce primitive proxies, learnable queries that are contextualized to produce assembly-ready outputs. To ensure consistent optimization, our training strategy couples primitives and points with online target updates. Across synthetic and real-world benchmarks with four independent assembly solvers, UniCo consistently outperforms recent baselines, lowering Chamfer distance by up to 50% and improving normal consistency by up to 7%. These results establish an attractive recipe for structured 3D understanding from incomplete data.

@misc{chen2026unico, title = {Unified Primitive Proxies for Structured Shape Completion}, author = {Chen, Zhaiyu and Wang, Yuqing and Zhu, Xiao Xiang}, year = {2026}, eprint = {2601.00759}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, }

- TGRS 2026

Reconstructing Building Height from Spaceborne TomoSAR Point Clouds Using a Dual-Topology Network
Zhaiyu Chen, Yuanyuan Wang, Yilei Shi, and Xiao Xiang Zhu
IEEE Transactions on Geoscience and Remote Sensing, 2026

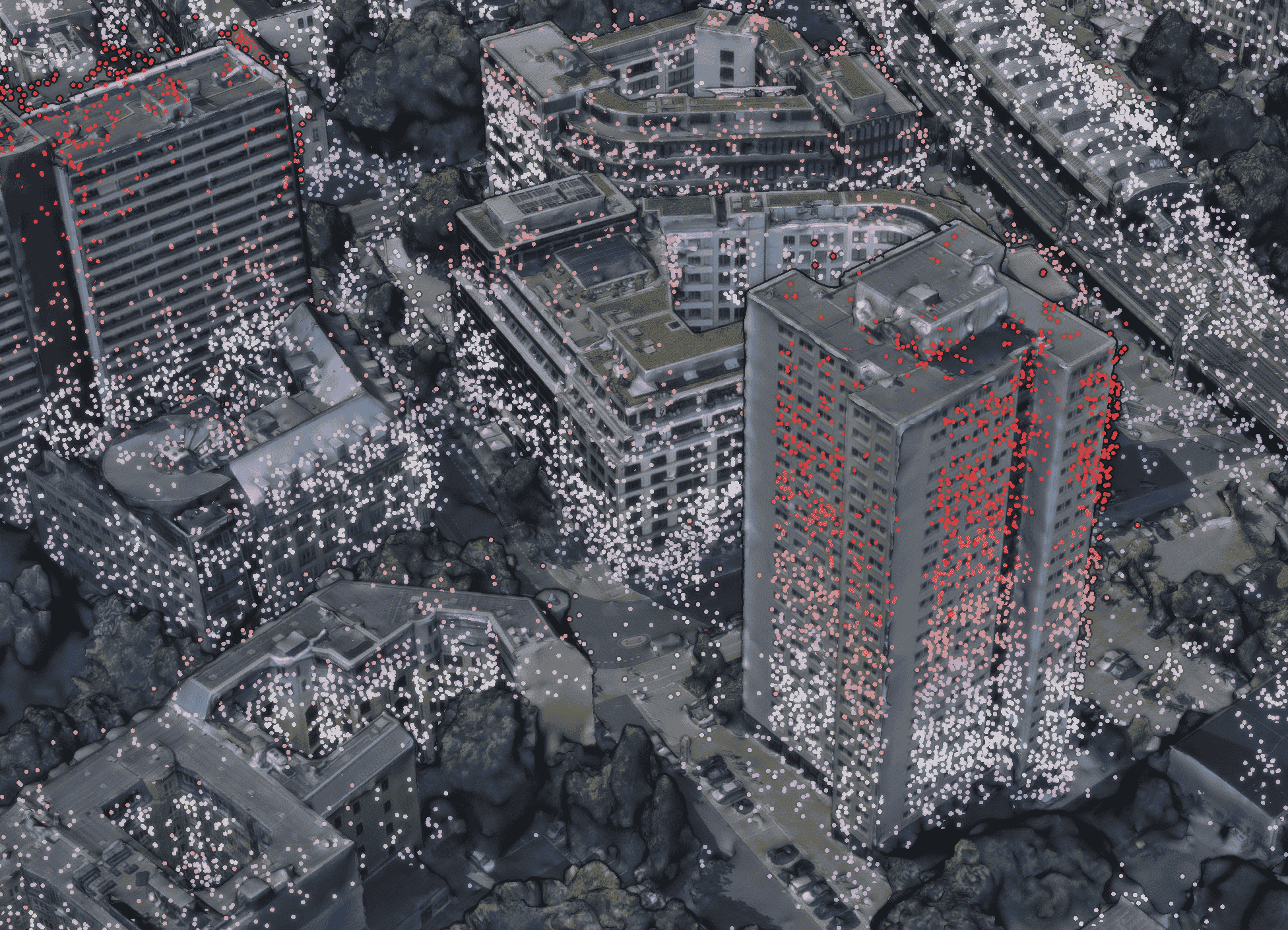

Reliable building height estimation is essential for various urban applications. Spaceborne SAR tomography (TomoSAR) provides weather-independent, side-looking observations that capture facade-level structure, offering a promising alternative to conventional optical methods. However, TomoSAR point clouds often suffer from noise, anisotropic point distributions, and data voids on incoherent surfaces, all of which hinder accurate height reconstruction. To address these challenges, we introduce a learning-based framework for converting raw TomoSAR points into high-resolution building height maps. Our dual-topology network alternates between a point branch that models irregular scatterer features and a grid branch that enforces spatial consistency. By jointly processing these representations, the network denoises the input points and inpaints missing regions to produce continuous height estimates. To our knowledge, this is the first proof of concept for large-scale urban height mapping directly from TomoSAR point clouds. Extensive experiments on data from Munich and Berlin validate the effectiveness of our approach. Moreover, we demonstrate that our framework can be extended to incorporate optical satellite imagery, further enhancing reconstruction quality.

@article{chen2026tomosar2height, title = {Reconstructing Building Height from Spaceborne TomoSAR Point Clouds Using a Dual-Topology Network}, journal = {IEEE Transactions on Geoscience and Remote Sensing}, year = {2026}, author = {Chen, Zhaiyu and Wang, Yuanyuan and Shi, Yilei and Zhu, Xiao Xiang}, }

- ISPRS 2026

TUM2TWIN: Introducing the large-scale multimodal urban digital twin benchmark dataset
Olaf Wysocki, Benedikt Schwab, Manoj Kumar Biswanath, Michael Greza, Qilin Zhang, Jingwei Zhu, and 28 more authors
ISPRS Journal of Photogrammetry and Remote Sensing, 2026

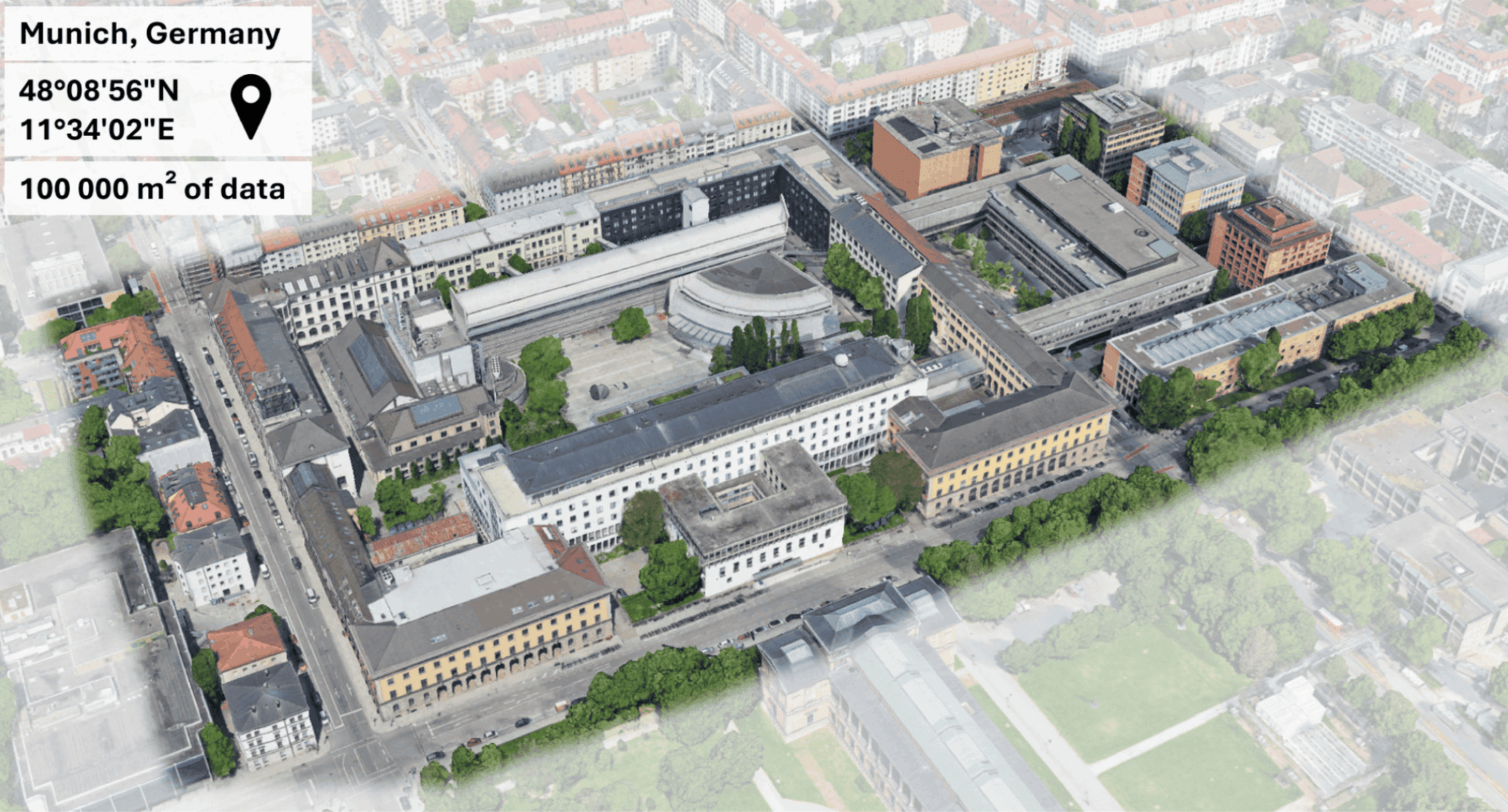

Urban Digital Twins (UDTs) have become essential for managing cities and integrating complex, heterogeneous data from diverse sources. Creating UDTs involves challenges at multiple process stages, including acquiring accurate 3D source data, reconstructing high-fidelity 3D models, keeping models up to date, and ensuring seamless interoperability with downstream tasks. Current datasets are usually limited to one part of the processing chain, hampering comprehensive UDT validation. To address these challenges, we introduce the first comprehensive multimodal Urban Digital Twin benchmark dataset: TUM2TWIN. This dataset includes georeferenced, semantically aligned 3D models and networks along with various terrestrial, mobile, aerial, and satellite observations, boasting 32 data subsets over roughly 100,000 m² and currently 767 GB of data. By ensuring georeferenced indoor–outdoor acquisition, high accuracy, and multimodal data integration, the benchmark supports robust analysis of sensors and the development of advanced reconstruction methods. Additionally, we explore downstream tasks demonstrating the potential of TUM2TWIN, including novel view synthesis with NeRF and Gaussian Splatting, solar potential analysis, point cloud semantic segmentation, and LoD3 building reconstruction. We are convinced this contribution lays a foundation for overcoming current limitations in UDT creation, fostering new research directions and practical solutions for smarter, data-driven urban environments. The project is available at https://tum2t.win.

@article{wysocki2026tum2twin, title = {TUM2TWIN: Introducing the large-scale multimodal urban digital twin benchmark dataset}, journal = {ISPRS Journal of Photogrammetry and Remote Sensing}, volume = {232}, pages = {810-830}, year = {2026}, issn = {0924-2716}, doi = {10.1016/j.isprsjprs.2025.12.013}, author = {Wysocki, Olaf and Schwab, Benedikt and Biswanath, Manoj Kumar and Greza, Michael and Zhang, Qilin and Zhu, Jingwei and Froech, Thomas and Heeramaglore, Medhini and Hijazi, Ihab and Kanna, Khaoula and Pechinger, Mathias and Chen, Zhaiyu and Sun, Yao and Segura, Alejandro Rueda and Xu, Ziyang and AbdelGafar, Omar and Mehranfar, Mansour and Yeshwanth, Chandan and Liu, Yueh-Cheng and Yazdi, Hadi and Wang, Jiapan and Auer, Stefan and Anders, Katharina and Bogenberger, Klaus and Borrmann, André and Dai, Angela and Hoegner, Ludwig and Holst, Christoph and Kolbe, Thomas H. and Ludwig, Ferdinand and Nießner, Matthias and Petzold, Frank and Zhu, Xiao Xiang and Jutzi, Boris} }

2025

- NeurIPS 2025

Learning Generalizable Shape Completion with SIM(3) Equivariance
Yuqing Wang*, Zhaiyu Chen*, and Xiao Xiang Zhu
Advances in Neural Information Processing Systems, 2025

3D shape completion methods typically assume scans are pre-aligned to a canonical frame. This leaks pose and scale cues that networks may exploit to memorize absolute positions rather than infer intrinsic geometry. When such alignment is absent in real data, performance collapses. We argue that robust generalization demands architectural equivariance to the similarity group, SIM(3), so the model remains agnostic to pose and scale. Following this principle, we introduce the first SIM(3)-equivariant shape completion network, whose modular layers successively canonicalize features, reason over similarity-invariant geometry, and restore the original frame. Under a de-biased evaluation protocol that removes the hidden cues, our model outperforms both equivariant and augmentation baselines on the PCN benchmark. It also sets new cross-domain records on real driving and indoor scans, lowering minimal matching distance on KITTI by 17% and Chamfer distance $\ell_1$ on OmniObject3D by 14%. Perhaps surprisingly, under the stricter protocol our model still outperforms competitors evaluated under their biased settings. These results establish full SIM(3) equivariance as an effective route to truly generalizable shape completion.
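For readers unfamiliar with the metric, here is a brute-force sketch of the $\ell_1$ Chamfer distance. It assumes the convention common in PCN-style benchmarks: unsquared Euclidean nearest-neighbour distances, averaged over each point set, with the two directional terms then averaged. Papers differ on these details (some sum the two directions), and the exact variant used in the evaluation above is not stated here. The function names are hypothetical.

```python
import math
from typing import Sequence

Point = Sequence[float]


def _mean_nearest(src: Sequence[Point], dst: Sequence[Point]) -> float:
    """Average Euclidean distance from each point in src to its nearest point in dst."""
    return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)


def chamfer_l1(p: Sequence[Point], q: Sequence[Point]) -> float:
    """Symmetric Chamfer distance with unsquared distances (assumed PCN-style convention).

    Both directions are averaged, so identical sets score 0 and the result is symmetric.
    """
    if not p or not q:
        raise ValueError("both point sets must be non-empty")
    return 0.5 * (_mean_nearest(p, q) + _mean_nearest(q, p))


if __name__ == "__main__":
    pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    gt = [(0.0, 0.0, 0.0)]
    # pred -> gt: mean(0, 1) = 0.5; gt -> pred: 0; average = 0.25
    print(chamfer_l1(pred, gt))
```

The quadratic nearest-neighbour search is only for clarity; for realistic point counts a k-d tree or batched GPU search is the usual choice.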

@article{wang2025simeco, title = {Learning Generalizable Shape Completion with SIM(3) Equivariance}, journal = {Advances in Neural Information Processing Systems}, year = {2025}, author = {Wang, Yuqing and Chen, Zhaiyu and Zhu, Xiao Xiang}, }

- JOSS 2025

abspy: A Python Package for 3D Adaptive Binary Space Partitioning and Modeling
Zhaiyu Chen
Journal of Open Source Software, 2025

Efficient and robust partitioning of 3D space underpins many applications in computer graphics. abspy is a Python package for adaptive binary space partitioning and 3D modeling. At its core, abspy constructs a plane arrangement by recursively subdividing 3D space with planar primitives to form a linear cell complex that reflects the underlying geometric structure. This adaptive scheme iteratively refines the spatial decomposition, producing a compact representation that supports efficient 3D modeling and analysis. Built on robust arithmetic kernels and interoperable data structures, abspy exposes a high-level Python API that lets researchers prototype advanced 3D pipelines such as surface reconstruction, volumetric analysis, and feature extraction for machine learning.
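The recursive subdivision described above can be illustrated with a toy sketch. This is not abspy's API or algorithm: abspy operates on exact convex polyhedra with robust arithmetic, whereas this sketch approximates each cell by the sample points it contains. The names `Cell`, `build`, and `leaves` are hypothetical, and "adaptive" is modelled only as skipping any plane that does not separate a cell's samples.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical toy types, not part of abspy.
Point3 = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # (a, b, c, d) for a*x + b*y + c*z + d = 0


def signed_side(plane: Plane, p: Point3) -> float:
    """Positive in front of the plane, negative behind it, zero on it."""
    a, b, c, d = plane
    return a * p[0] + b * p[1] + c * p[2] + d


@dataclass
class Cell:
    points: List[Point3]
    plane: Optional[Plane] = None   # splitting plane; None marks a leaf cell
    front: Optional["Cell"] = None  # samples with signed_side >= 0
    back: Optional["Cell"] = None   # samples with signed_side < 0


def build(points: List[Point3], planes: List[Plane]) -> Cell:
    """Recursively split a cell, using a plane only if it separates the cell's samples."""
    cell = Cell(points)
    for i, plane in enumerate(planes):
        front = [p for p in points if signed_side(plane, p) >= 0]
        back = [p for p in points if signed_side(plane, p) < 0]
        if front and back:
            cell.plane = plane
            # Planes before i left every sample on one side, so they cannot split
            # any subset either; only the remaining planes are passed down.
            cell.front = build(front, planes[i + 1:])
            cell.back = build(back, planes[i + 1:])
            break
    return cell


def leaves(cell: Cell) -> List[Cell]:
    """Collect the leaf cells, i.e. the final partition."""
    if cell.plane is None:
        return [cell]
    return leaves(cell.front) + leaves(cell.back)


if __name__ == "__main__":
    corners = [(x, y, z) for x in (-1.0, 1.0) for y in (-1.0, 1.0) for z in (-1.0, 1.0)]
    planes = [(1.0, 0.0, 0.0, 0.0), (0.0, 1.0, 0.0, 0.0),
              (0.0, 0.0, 1.0, 0.0), (1.0, 0.0, 0.0, -5.0)]
    print(len(leaves(build(corners, planes))))  # prints 8; the plane x = 5 cuts nothing
```

Skipping non-separating planes keeps the tree compact, which is the intuition behind an adaptive partition; an exact implementation would test each plane against the cell's polyhedron instead of its samples.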

@article{chen2025abspy, title = {abspy: A Python Package for 3D Adaptive Binary Space Partitioning and Modeling}, journal = {Journal of Open Source Software}, year = {2025}, author = {Chen, Zhaiyu}, doi = {10.21105/joss.07946}, }

- CVPR 2025

Parametric Point Cloud Completion for Polygonal Surface Reconstruction
Zhaiyu Chen, Yuqing Wang, Liangliang Nan, and Xiao Xiang Zhu
IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025

Existing polygonal surface reconstruction methods heavily depend on input completeness and struggle with incomplete point clouds. We argue that while current point cloud completion techniques may recover missing points, they are not optimized for polygonal surface reconstruction, where the parametric representation of underlying surfaces remains overlooked. To address this gap, we introduce parametric completion, a novel paradigm for point cloud completion, which recovers parametric primitives instead of individual points to convey high-level geometric structures. Our presented approach, PaCo, enables high-quality polygonal surface reconstruction by leveraging plane proxies that encapsulate both plane parameters and inlier points, proving particularly effective in challenging scenarios with highly incomplete data. Comprehensive evaluations of our approach on the ABC dataset establish its effectiveness with superior performance and set a new standard for polygonal surface reconstruction from incomplete data.

@inproceedings{chen2025paco, title = {Parametric Point Cloud Completion for Polygonal Surface Reconstruction}, booktitle = {IEEE/CVF Conference on Computer Vision and Pattern Recognition}, year = {2025}, author = {Chen, Zhaiyu and Wang, Yuqing and Nan, Liangliang and Zhu, Xiao Xiang}, }

2024

- ISPRS 2024

PolyGNN: Polyhedron-based Graph Neural Network for 3D Building Reconstruction from Point Clouds
Zhaiyu Chen, Yilei Shi, Liangliang Nan, Zhitong Xiong, and Xiao Xiang Zhu
ISPRS Journal of Photogrammetry and Remote Sensing, 2024

We present PolyGNN, a polyhedron-based graph neural network for 3D building reconstruction from point clouds. PolyGNN learns to assemble primitives obtained by polyhedral decomposition via graph node classification, achieving a watertight and compact reconstruction. To effectively represent arbitrary-shaped polyhedra in the neural network, we propose a skeleton-based sampling strategy to generate polyhedron-wise queries. These queries are then incorporated with inter-polyhedron adjacency to enhance the classification. PolyGNN is end-to-end optimizable and is designed to accommodate variable-size input points, polyhedra, and queries with an index-driven batching technique. To address the abstraction gap between existing city-building models and the underlying instances, and provide a fair evaluation of the proposed method, we develop our method on a large-scale synthetic dataset with well-defined ground truths of polyhedral labels. We further conduct a transferability analysis across cities and on real-world point clouds. Both qualitative and quantitative results demonstrate the effectiveness of our method, particularly its efficiency for large-scale reconstructions.

@article{chen2024polygnn, title = {PolyGNN: Polyhedron-based Graph Neural Network for 3D Building Reconstruction from Point Clouds}, journal = {ISPRS Journal of Photogrammetry and Remote Sensing}, volume = {218}, pages = {693-706}, year = {2024}, issn = {0924-2716}, doi = {10.1016/j.isprsjprs.2024.09.031}, author = {Chen, Zhaiyu and Shi, Yilei and Nan, Liangliang and Xiong, Zhitong and Zhu, Xiao Xiang}, }

- GRSM 2024

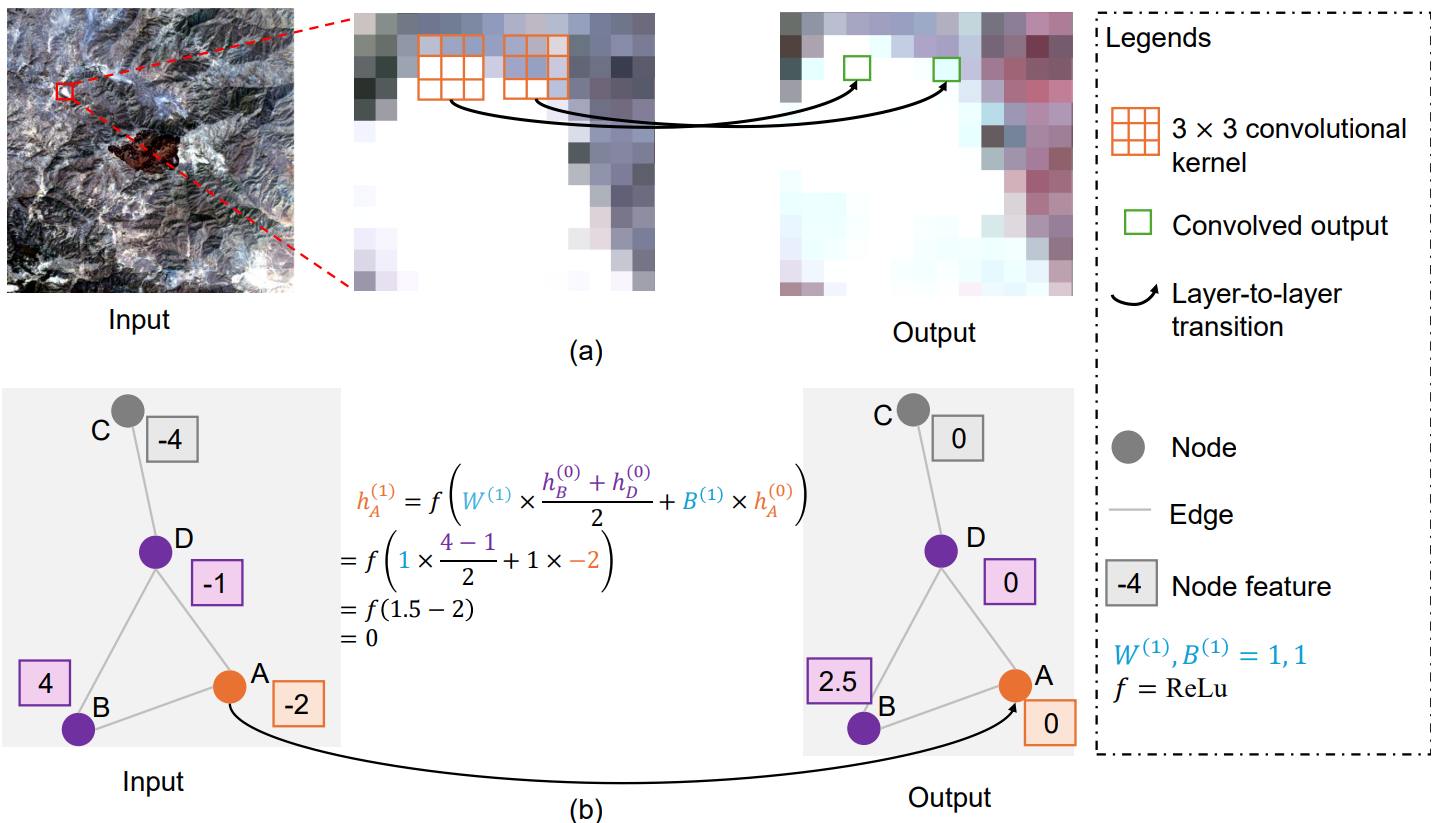

Beyond Grid Data: Exploring Graph Neural Networks for Earth Observation
Shan Zhao, Zhaiyu Chen, Zhitong Xiong, Yilei Shi, Sudipan Saha, and Xiao Xiang Zhu
IEEE Geoscience and Remote Sensing Magazine, 2024

Earth Observation (EO) data analysis has been significantly revolutionized by deep learning (DL), with applications typically limited to grid-like data structures. Graph Neural Networks (GNNs) emerge as an important innovation, propelling DL into the non-Euclidean domain. Naturally, GNNs can effectively tackle the challenges posed by diverse modalities, multiple sensors, and the heterogeneous nature of EO data. To introduce GNNs in the related domains, our review begins by offering fundamental knowledge on GNNs. Then, we summarize the generic problems in EO, to which GNNs can offer potential solutions. Following this, we explore a broad spectrum of GNNs’ applications to scientific problems in Earth systems, covering areas such as weather and climate analysis, disaster management, air quality monitoring, agriculture, land cover classification, hydrological process modeling, and urban modeling. The rationale behind adopting GNNs in these fields is explained, alongside methodologies for organizing graphs and designing favorable architectures for various tasks. Furthermore, we highlight methodological challenges of implementing GNNs in these domains and possible solutions that could guide future research. While acknowledging that GNNs are not a universal solution, we conclude the paper by comparing them with other popular architectures like transformers and analyzing their potential synergies.

@article{zhao2024gnn, author = {Zhao, Shan and Chen, Zhaiyu and Xiong, Zhitong and Shi, Yilei and Saha, Sudipan and Zhu, Xiao Xiang}, journal = {IEEE Geoscience and Remote Sensing Magazine}, title = {Beyond Grid Data: Exploring Graph Neural Networks for Earth Observation}, year = {2024}, volume = {13}, number = {1}, pages = {175-208}, keywords = {Earth;Data models;Graph neural networks;Monitoring;Meteorology;Reviews;Time series analysis;Point cloud compression;Context modeling;Training}, doi = {10.1109/MGRS.2024.3493972}, }

- SIGSPATIAL 2024

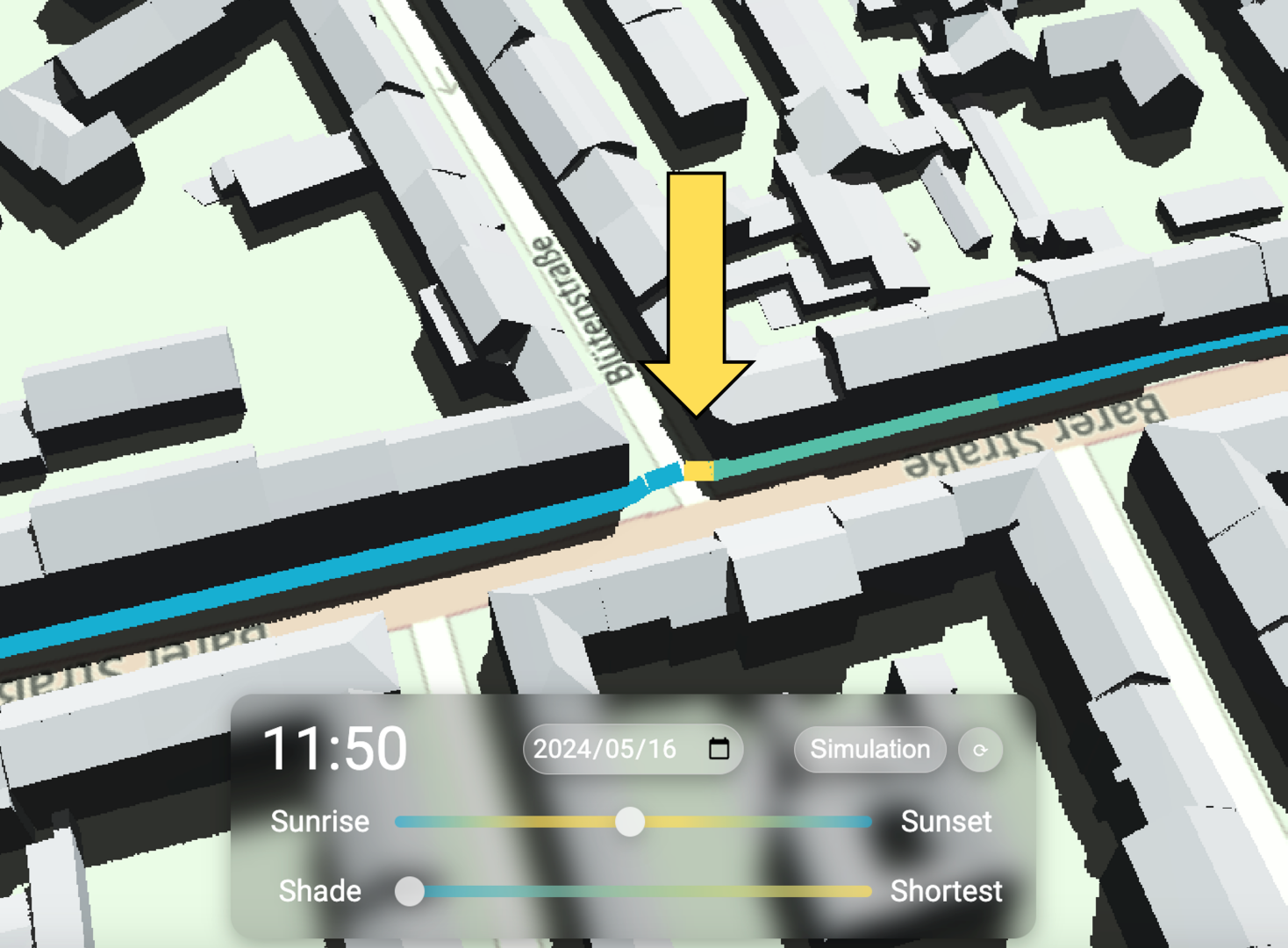

Walking in the Shade: Shadow-oriented Navigation for Pedestrians
Yu Feng, Puzhen Zhang, Jiaying Xue, Zhaiyu Chen, and Liqiu Meng
In Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems, Atlanta, GA, USA, 2024

Excessive exposure to the sun and the resulting heat pose health risks to pedestrians in hot weather. To mitigate these risks, we propose a shadow-oriented navigation system that offers cooler and more convenient walking routes by simulating shadows. Our system integrates a manually corrected pedestrian network from OpenStreetMap with LoD2 3D city models, using a ray tracing module for real-time shadow simulation. It optimizes routes to be either cooler or shorter based on user preferences, with easy verification through 3D scene visualization. Our navigation system has been implemented in a study area in Munich, Germany, with further discussions on the technical feasibility and challenges of extending it to larger areas.

@inproceedings{feng2024shade, author = {Feng, Yu and Zhang, Puzhen and Xue, Jiaying and Chen, Zhaiyu and Meng, Liqiu}, title = {Walking in the Shade: Shadow-oriented Navigation for Pedestrians}, year = {2024}, isbn = {9798400711077}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, doi = {10.1145/3678717.3691287}, booktitle = {Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems}, pages = {677-680}, numpages = {4}, keywords = {3D city model, Comfort-oriented navigation, Pedestrian navigation, Shadow simulation}, location = {Atlanta, GA, USA}, series = {SIGSPATIAL '24}, }

- IGARSS 2024

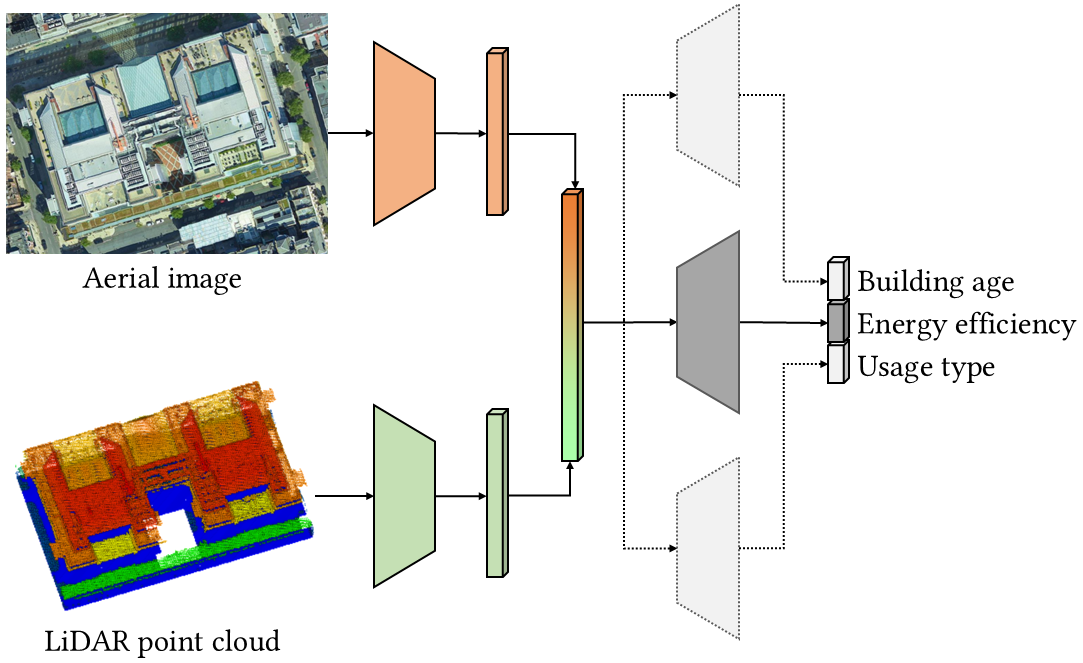

Learning building energy efficiency with semantic attributes
Zhaiyu Chen, Ziqi Gu, Yilei Shi, and Xiao Xiang Zhu
In IEEE International Geoscience and Remote Sensing Symposium, Jul 2024

Non-intrusive estimation of building energy efficiency has profound applications in advancing sustainability in the built environment. Recent studies often focus on predicting energy performance alone, neglecting the interplay between the performance and related building semantics. This paper investigates whether incorporating semantic attributes benefits energy efficiency estimation. We develop a neural network to estimate energy efficiency, with building age and usage type as additional supervision for multi-task learning. The neural network processes both aerial imagery and airborne LiDAR data to classify buildings as energy-efficient or inefficient. Our results demonstrate the effectiveness of the superimposed semantics, particularly with building age. With the multi-task model achieving a 63.78% F1 score and outperforming the model supervised solely on energy efficiency by 2.86%, this paper reveals the potential of integrating semantic attributes in modeling building energy performance.

@inproceedings{chen2024energy, author = {Chen, Zhaiyu and Gu, Ziqi and Shi, Yilei and Zhu, Xiao Xiang}, booktitle = {IEEE International Geoscience and Remote Sensing Symposium}, title = {Learning building energy efficiency with semantic attributes}, year = {2024}, pages = {909-912}, doi = {10.1109/igarss53475.2024.10642234}, month = jul, }

2022

- ISPRS 2022

Reconstructing Compact Building Models from Point Clouds using Deep Implicit Fields
Zhaiyu Chen, Hugo Ledoux, Seyran Khademi, and Liangliang Nan
ISPRS Journal of Photogrammetry and Remote Sensing, 2022

While three-dimensional (3D) building models play an increasingly pivotal role in many real-world applications, obtaining a compact representation of buildings remains an open problem. In this paper, we present a novel framework for reconstructing compact, watertight, polygonal building models from point clouds. Our framework comprises three components: (a) a cell complex is generated via adaptive space partitioning that provides a polyhedral embedding as the candidate set; (b) an implicit field is learned by a deep neural network that facilitates building occupancy estimation; (c) a Markov random field is formulated to extract the outer surface of a building via combinatorial optimization. We evaluate and compare our method with state-of-the-art methods in generic reconstruction, model-based reconstruction, geometry simplification, and primitive assembly. Experiments on both synthetic and real-world point clouds have demonstrated that, with our neural-guided strategy, high-quality building models can be obtained with significant advantages in fidelity, compactness, and computational efficiency. Our method also shows robustness to noise and insufficient measurements, and it can directly generalize from synthetic scans to real-world measurements.

@article{chen2022points2poly, title = {Reconstructing Compact Building Models from Point Clouds using Deep Implicit Fields}, journal = {ISPRS Journal of Photogrammetry and Remote Sensing}, volume = {194}, pages = {58-73}, year = {2022}, issn = {0924-2716}, doi = {10.1016/j.isprsjprs.2022.09.017}, author = {Chen, Zhaiyu and Ledoux, Hugo and Khademi, Seyran and Nan, Liangliang}, }